|

|

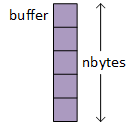

In one sense, block I/O operations are simple, requiring only an address in primary or main memory and the number of bytes to transfer. However, the block read and write functions often rely on auxiliary operations or concepts, leading to potentially confusing syntax. The address is where the read function stores the input data and where the write function takes the output data. Furthermore, the block I/O operations are simple in the sense that they don't modify or interpret the data in any way - they only move it as a stream of bytes between the program and a file. Although the first parameter is a character pointer, the functions can operate on all data with appropriate typecasts.

|

|

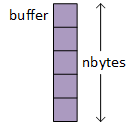

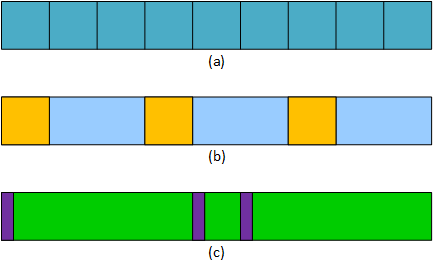

Block I/O is flexible, powerful, and a mainstay of database computing. However, programs typically perform block I/O in binary mode, making moving the data between dissimilar systems challenging. Even on the same computer, different compiler settings can make data written by one program difficult to read by another. The differences between various data representations often make it necessary to programmatically "massage" data when transferring it between systems. Alternatively, programs can export the data to more portable formats, such as the comma-separated values or CSV format used by many spreadsheet and database programs, and import it on another system. Fortunately, moving binary data between incompatible systems happens infrequently.

Although the read and write functions' first parameter is a character pointer, they treat the data as typeless. When they read or write data, they process it as a stream or sequence of bytes, making its type irrelevant. So, with a combination of the address of operator, typecasting, and the sizeof operator, the block I/O functions can support virtually any kind of data.

ifstream in(in_name, ios::binary); ofstream out(out_name, ios::binary);

int counter; |

out.write((char *) &counter, sizeof(int));

in.read((char *) & counter, sizeof(int)); |

while (in.read((char *) &counter, sizeof(int)))

... |

struct foo { . . . };

foo my_foo; |

out.write((char *) &my_foo, sizeof(foo)); in.read((char *) &my_foo, sizeof(foo)); |

while (in.read((char *) &my_foo, sizeof(foo)))

... |

double, float, or any fundamental type. Similarly, programmers can replace struct in the second row with class. However, while possible, reading and writing objects with pointer fields is quite challenging.

Programs typically control output or write operations based on external events, for example, a user choosing to end data input. Conversely, input or read operations typically loop, alternately reading and processing data until reaching the end of the file. The read function in the third column returns an input stream, and operator bool converts it to a Boolean value denoting the file's state, driving the loop.

int array[100]; |

for (int i = 0; i < 100; i++)

out.write((char *) &array[i], sizeof(int)); |

int i = 0; while (in.read((char *) &array[i], sizeof(int))) i++; // and additional processing |

struct foo { . . . };

foo my_foo[100]; |

for (int i = 0; i < 100; i++)

out.write((char *) &my_foo[i], sizeof(foo)); |

int i = 0; while (in.read((char *) &my_foo[i], sizeof(foo))) i++; // and additional processing |

while (in.read((char *) &my_foo[i++], sizeof(foo)))

Programs often use the index variable in two ways. First, as the term suggests, as an array index stepping through an array. However, subsequent operations often use it as a count of the number of objects read. b-rolodex2.cpp in the following section demonstrates a program doing this. The read function detects and reports reaching the end of a file by setting a stream's state flags on a failed read operation. Incrementing the index inside the read operation counts the failed read, resulting in an incorrect count.

int array[100]; |

out.write((char *) array, n * sizeof(int)); in.read((char *) array, n * sizeof(int)); |

struct foo { . . . };

foo my_foo[100]; |

out.write((char *) my_foo, n * sizeof(foo)); in.read((char *) my_foo, n * sizeof(foo)); |