A paradigm is "a set of assumptions, concepts, values, and practices that constitutes a way of viewing reality for the community that shares them, especially in an intellectual discipline." When applied to software development, a paradigm guides how developers view a problem and organize its solution. Think of a paradigm as a language for describing a problem and for designing and implementing a software solution. The term paradigm shift denotes "a fundamental change in [the] approach or underlying assumptions." Applied to software development, it means an abrupt change in the language between parts of the development process. Computer scientists have used various techniques to help manage the increasing complexity of software systems. Each technique is based on a different paradigm, and each advancement caused or resulted in a paradigm shift.

To develop large, complex software systems, developers break the overall development process into smaller, more manageable steps or phases. Jurison [1] notes that "The choice of the software development process has a significant influence on the project's success. The appropriate process can lead to faster completion, reduced cost, improved quality, and lower risk. The wrong process can lead to duplicated work efforts and schedule slips, and create continual management problems."

Many software development processes have been proposed over time, each defining a specific set of phases or steps. The steps and their names vary somewhat from one process to another, but three are common to most processes: analysis, design, and implementation.

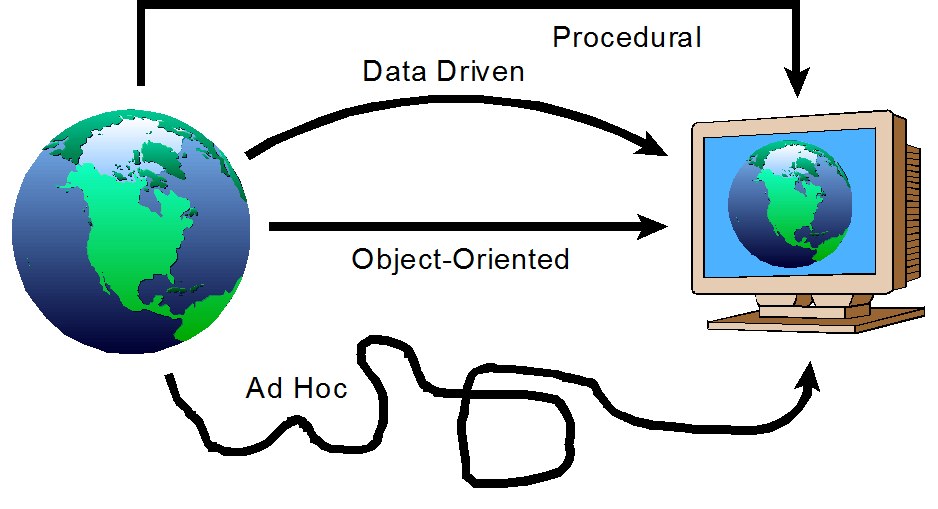

A single individual or group may complete all three phases for a small project. Larger projects often require different groups with specialized skills to complete each phase. Although analysis and design are distinct phases with distinct goals, they typically use the same language or paradigm, so the following figures illustrate them together for simplicity and compactness.

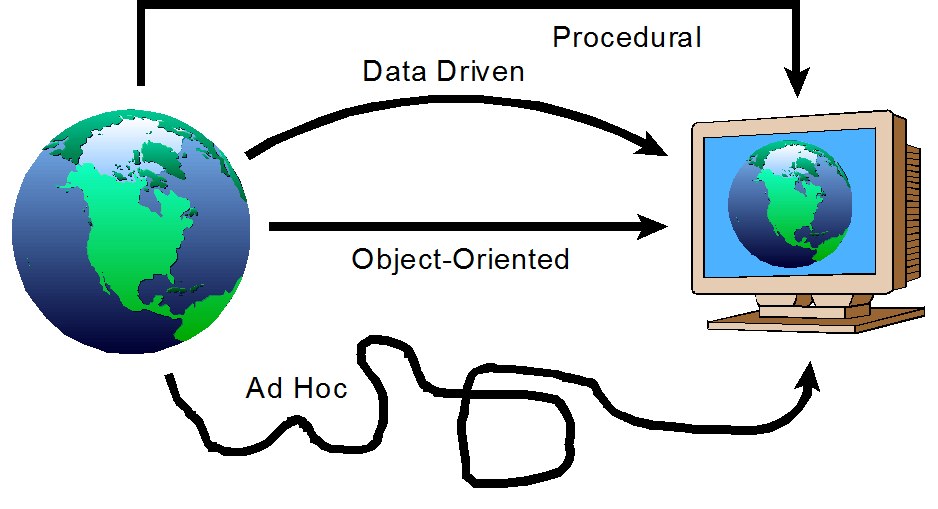

Historically, software developers have experimented with three major software development paradigms: procedural, data-driven, and object-oriented. Before adopting formal design processes, developers used "organic," ad hoc techniques (that is, programs "grew" in a haphazard way). Figure 1 illustrates that each technique can lead to a working program, but not in the same amount of time or with the same amount of effort.

The procedural paradigm focuses on how to solve a problem. Software developers using this paradigm list the steps needed to solve the problem and then successively decompose those steps into smaller, simpler sub-problems, which they ultimately represent with procedures, functions, or methods. Analysts often represent a procedural decomposition as a hierarchy: a list or a tree. The top or root of the tree represents the overall problem, and the leaves at the bottom denote the final procedures or functions. The nodes or branching points between the top of the tree and the bottom represent intermediate functions, which are called from the functions above them and call the functions below them. There are no well-defined rules for performing a decomposition or for determining when the functions are simple enough to allow programming to begin. The absence of these rules suggests that decompositions are somewhat arbitrary.

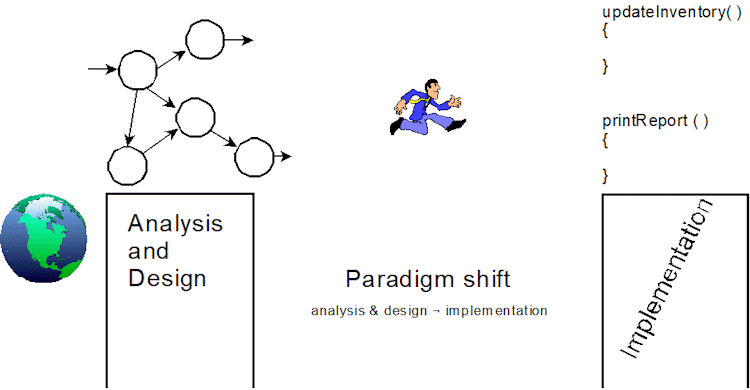
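The procedural decomposition described above can be sketched in C++ as a small tree of functions. This is only an illustration under stated assumptions: the order-total problem, the function names, and the flat shipping rule are all hypothetical and do not come from the text. The root function represents the overall problem, one intermediate function sits in the middle of the tree, and the leaves are simple enough to program directly.

```cpp
#include <vector>

// Hypothetical problem: compute the total cost of an order.

// Leaf: add up the item prices.
double subtotal(const std::vector<double>& prices) {
    double sum = 0.0;
    for (double p : prices) {
        sum += p;
    }
    return sum;
}

// Leaf: tax owed on an amount at a given rate.
double salesTax(double amount, double rate) {
    return amount * rate;
}

// Leaf: assumed flat shipping fee, waived for amounts of 50 or more.
double shipping(double amount) {
    return amount >= 50.0 ? 0.0 : 5.0;
}

// Intermediate node: called from the root, calls two leaves.
double extraCharges(double amount, double taxRate) {
    return salesTax(amount, taxRate) + shipping(amount);
}

// Root: the overall problem, expressed as calls to the nodes below it.
double orderTotal(const std::vector<double>& prices, double taxRate) {
    double sub = subtotal(prices);
    return sub + extraCharges(sub, taxRate);
}
```

Whether `extraCharges` deserves to be its own function is a judgment call, which reflects the point that no firm rule says where a decomposition should stop.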

Analysts observe the "real world" and translate their observations into procedural models. Designers refine the models, adding missed features while removing unnecessary ones. The functions composing the hierarchical models correspond well, if not precisely, with the final functions programmers create during implementation. Nevertheless, the procedural paradigm suffers from a paradigm shift between the real world and the analysis and design model.

The real world lacks neatly bounded and organized problem-solving steps or procedures. So, analysts synthesize them from their observations. While software professionals might understand the connections or articulations between the real-world elements and the model functions, customers and professionals in other disciplines might not. For example, accountants, buyers, and customers may understand their respective roles in managing accounts receivable and payable, stocking a store's shelves, and buying an individual product. However, they may not recognize their role in a decomposed model or program. There is an abrupt change between the problem and the procedural decomposition. This abrupt change is a paradigm shift. Nevertheless, the procedural model is still appropriate for small, simple programs, and what we learn while studying it will carry over to our study of the object-oriented paradigm.

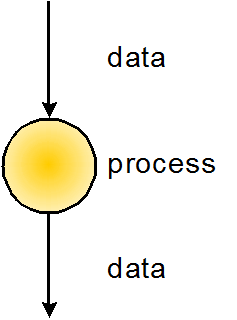

Software developers created and used numerous data-driven paradigms from the mid-1970s through the mid-1990s. Data-driven models follow data throughout the system. They begin following it when it enters the system, continue following it as it passes through transforming processes, and stop when it leaves. Analysis and design model the data flowing through the system as arrows entering and leaving process bubbles. Sometimes, multiple arrows enter a bubble, where the system merges them into a single exiting flow; sometimes, a single flow enters, only to be separated into multiple flows leaving the process. The result is a web of data and processes that describes a given problem.
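To make the bubbles and arrows concrete, the following minimal C++ sketch treats each flow as a vector of records and each process bubble as a function. Everything here is an assumption made for illustration: the `Reading` record, the `Flow` alias, the threshold of 10, and the function names are hypothetical. One process splits a flow in two, one transforms a flow, and one merges two flows into one.

```cpp
#include <string>
#include <utility>
#include <vector>

// Hypothetical data record moving through the system.
struct Reading {
    std::string sensor;
    double value;
};

// A flow (an arrow in the diagram) is modeled as a sequence of records.
using Flow = std::vector<Reading>;

// Process bubble: one flow enters, two flows leave.
std::pair<Flow, Flow> splitByThreshold(const Flow& in, double threshold) {
    Flow low;
    Flow high;
    for (const Reading& r : in) {
        if (r.value < threshold) {
            low.push_back(r);
        } else {
            high.push_back(r);
        }
    }
    return {low, high};
}

// Process bubble: one flow enters, one transformed flow leaves.
Flow scale(const Flow& in, double factor) {
    Flow out;
    for (const Reading& r : in) {
        out.push_back({r.sensor, r.value * factor});
    }
    return out;
}

// Process bubble: two flows enter, one merged flow leaves.
Flow merge(const Flow& a, const Flow& b) {
    Flow out = a;
    out.insert(out.end(), b.begin(), b.end());
    return out;
}

// The whole diagram: data enters, passes through the processes, and leaves.
Flow runSystem(const Flow& input) {
    std::pair<Flow, Flow> parts = splitByThreshold(input, 10.0);
    return merge(scale(parts.first, 2.0), parts.second);
}
```

Note that the diagram says what happens to the data, while each function body still has to spell out how, which hints at the gap between data flow models and code.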

An analysis based on a data-driven paradigm facilitates developer understanding of the problem domain, and the results are easier for non-professional stakeholders to understand. The data-driven models are also more useful than hierarchical models for software testing, validation, and documentation.

While a data flow's bubble-and-arrow diagrams match the real world, there is a large gap between the data flow diagrams and the programs written during the implementation phase. The gap is a paradigm shift resulting from changing the underlying language from data flows, which focus on *what*, to procedures, which focus on *how*.

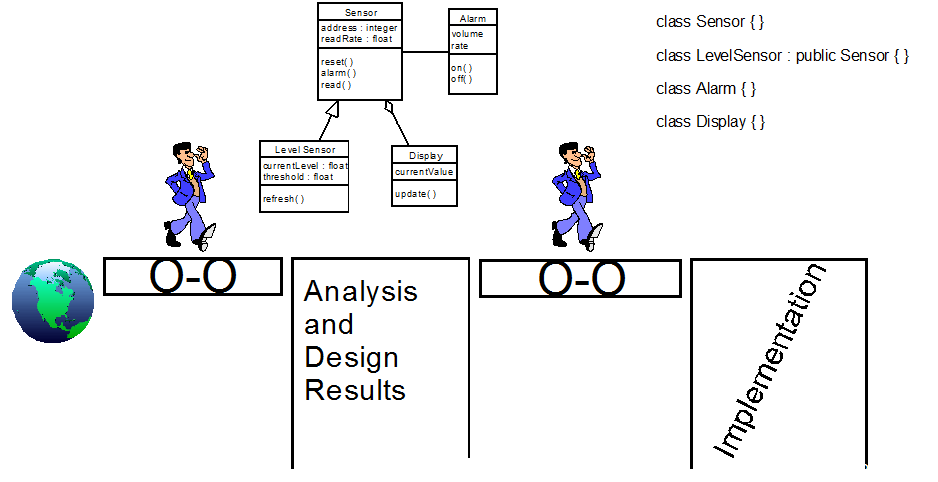

The object-oriented paradigm encapsulates data and the procedures or operations that may access and operate on that data into an entity called an object. In that way, the object-oriented paradigm simultaneously focuses on *what* and *how*. A class describes a group of similar objects. It is often easier for developers to understand classes and how they are connected using a graphical notation; the illustration below includes four classes arranged in a class diagram using the Unified Modeling Language (UML) notation. A common metaphor for classes and the objects created or instantiated from them is a cookie cutter and cookies. A cookie cutter determines a cookie's size, shape, and decorations, but we can't eat the cookie cutter. We can, however, roll out some dough and stamp out as many cookies with the cookie cutter as we like. If we use the same cookie cutter, all the cookies will be identical. A class is like a cookie cutter, and the objects created or instantiated from it are like cookies: objects instantiated from the same class have the same structure and operations, although each object holds its own data values.
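In code, the cookie cutter and its cookies might look like the following minimal C++ sketch. The `Account` class, its members, and the owner names used in the usage note are hypothetical and chosen only for illustration. The class bundles the data (an owner and a balance) with the operations that work on that data.

```cpp
#include <string>
#include <utility>

// The "cookie cutter": describes what every Account object contains
// and which operations it supports.
class Account {
public:
    Account(std::string owner, double balance)
        : owner_(std::move(owner)), balance_(balance) {}

    // Operations packaged together with the data they act on.
    void deposit(double amount) { balance_ += amount; }
    double balance() const { return balance_; }
    const std::string& owner() const { return owner_; }

private:
    std::string owner_;  // data stored in each object
    double balance_;
};
```

Each object instantiated from the class is one "cookie." For example, after `Account a("Ana", 100.0);` and `Account b("Ben", 100.0);`, calling `a.deposit(50.0)` changes only the balance stored in `a`; both objects have the same shape, but each keeps its own values.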

Objects in the real world are identified during analysis and abstracted into classes. During the design phase, developers add detail to the classes, add new classes, and sometimes remove classes. Still, for the most part, software development carries the classes discovered during analysis into design. The design phase refines the classes and passes them into the implementation phase. When software developers implement the classes, they translate the UML class diagrams into an appropriate programming language. Significantly, the concepts of classes and objects do not change from one phase to another. Classes bring a conceptual consistency to software development that bridges the real world, analysis, design, and implementation.

The ability of the object-oriented paradigm to bridge the gaps between the phases, smoothing the development process, is only one of its many strengths. It retains the best characteristics of the procedural and data-driven paradigms while overcoming or minimizing their worst features. For example, the models created during analysis help developers understand the problem domain similarly to data-driven models because classes represent data. But classes also denote operations or procedures, so the transition from design to implementation is similar to the procedural paradigm. Furthermore, as each class defines a new, intermediate scope (the region of a program where a variable is visible and accessible), the object-oriented paradigm also allows some but not all of the procedures in a program to access the data. Controlling data access reduces the functional coupling that practically limits the size and complexity of software created with the procedural paradigm. The many strengths of the object-oriented paradigm make it the current best practice for creating large, complex software systems.
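The point about class scope and controlled data access can be sketched as follows. This is a hedged illustration, not a prescribed design: the `StockItem` class, its rules, and the `restockIfLow` function are hypothetical. Only the member functions can touch the private data, so every other procedure in the program must go through the class's public operations.

```cpp
// Hypothetical class: the quantity on a store shelf.
class StockItem {
public:
    // Assumed rule for this sketch: a negative starting quantity becomes 0.
    explicit StockItem(int quantity) : quantity_(quantity < 0 ? 0 : quantity) {}

    int quantity() const { return quantity_; }

    // Ignores non-positive amounts.
    void add(int n) {
        if (n > 0) {
            quantity_ += n;
        }
    }

    // Refuses to remove more than is in stock; reports success or failure.
    bool remove(int n) {
        if (n <= 0 || n > quantity_) {
            return false;
        }
        quantity_ -= n;
        return true;
    }

private:
    int quantity_;  // accessible only inside the class scope
};

// A procedure outside the class scope: it can use the public operations
// but cannot reach the data directly.
void restockIfLow(StockItem& item, int minimum) {
    if (item.quantity() < minimum) {
        item.add(minimum - item.quantity());
    }
    // item.quantity_ = minimum;  // would not compile: quantity_ is private
}
```

Because only three member functions can modify `quantity_`, a change to how the quantity is stored affects those functions alone, rather than every procedure that uses a `StockItem`.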

Java is commonly described as a pure object-oriented language because it is built around the object-oriented paradigm. For this reason, Java programs necessarily define everything, including library methods and symbolic constants, inside classes. In contrast, C++ is a hybrid language supporting both the procedural and object-oriented paradigms. C++ allows developers to choose the paradigm most appropriate for a given problem: the object-oriented paradigm for large, complex problems and the procedural paradigm for smaller, less complex problems.
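A short C++ sketch shows what the hybrid nature looks like in practice. The names `kPi`, `circleArea`, and `Circle` are hypothetical and used only for illustration. The constant and the first function live outside any class, in the procedural style; the same calculation also appears as a member function of a class, in the object-oriented style. In Java, by comparison, the corresponding constant is a member of a class (`Math.PI`).

```cpp
// Procedural style: a symbolic constant and a function defined
// outside any class.
const double kPi = 3.141592653589793;

double circleArea(double radius) {
    return kPi * radius * radius;
}

// Object-oriented style: the radius and the operation that uses it
// are packaged together in a class.
class Circle {
public:
    explicit Circle(double radius) : radius_(radius) {}
    double area() const { return kPi * radius_ * radius_; }

private:
    double radius_;
};
```

Both forms compile in the same C++ program, so a developer could start with `circleArea` for a small problem and move to `Circle` as the program grows.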